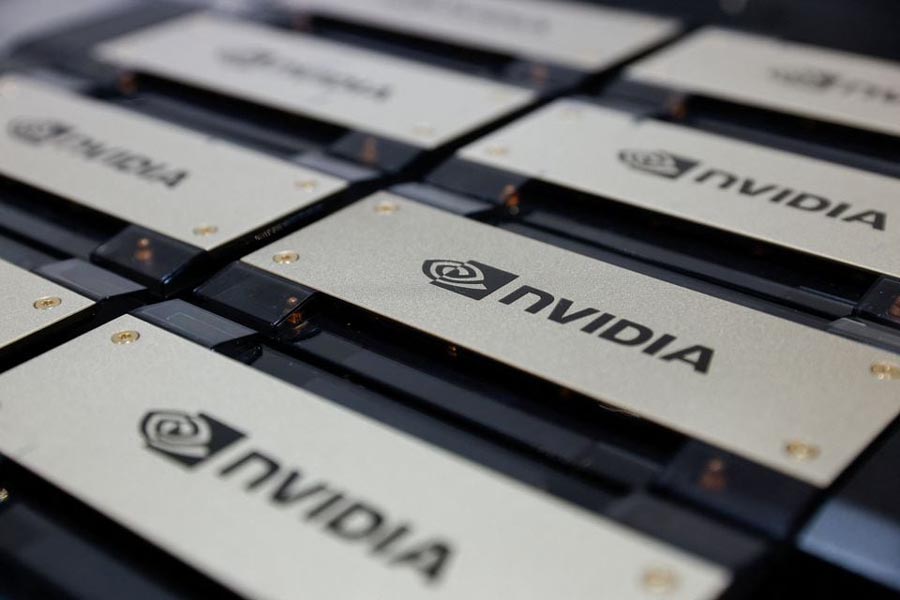

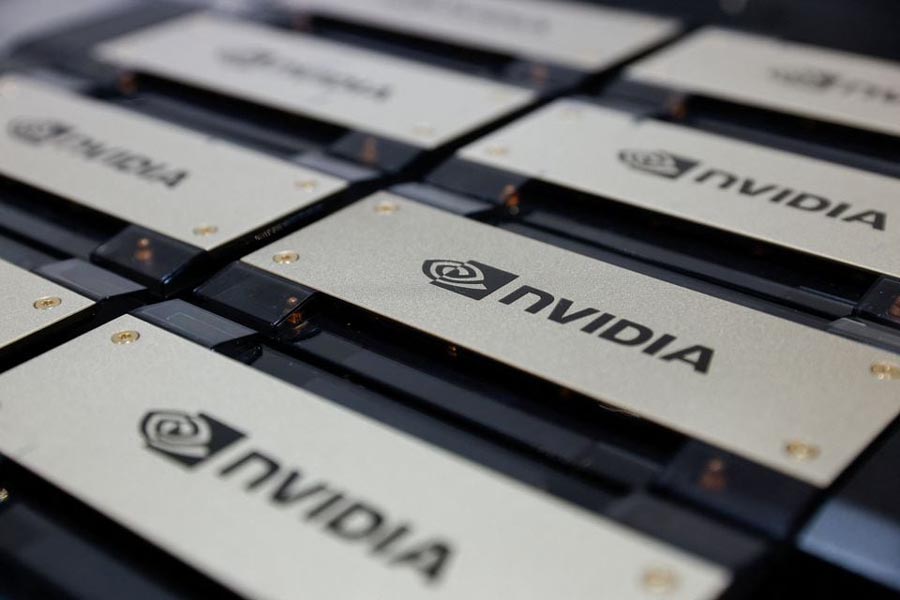

On Monday, Nvidia added features to its top artificial intelligence chip, saying the upgraded product will begin rolling out next year with Amazon, Alphabet's Google and Oracle.

The H200, as the chip is called, will surpass Nvidia's current flagship H100 chip. According to a Reuters report, the main upgrade is more high-bandwidth memory, one of the costliest parts of the chip and the component that determines how much data it can process quickly.

Nvidia dominates the market for AI chips, which power OpenAI's ChatGPT service and many similar generative AI services that respond to queries with human-like writing. The addition of more high-bandwidth memory and a faster connection to the chip's processing elements means such services will be able to return answers more quickly.

The H200 has 141 gigabytes of high-bandwidth memory, up from 80 gigabytes in its predecessor, the H100. Nvidia did not disclose its memory suppliers for the new chip, but Micron Technology said in September that it was working to become an Nvidia supplier.

Nvidia also buys memory from South Korea's SK Hynix, which said last month that AI chips are helping revive its sales.

Nvidia also said that Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to offer access to H200 chips, along with specialty AI cloud providers CoreWeave, Lambda and Vultr.

For all latest news, follow The Financial Express Google News channel.